Posted on 21 April 2011

Hacking Asterisks for We Are Forests

One Friday night, at an art exhibition opening at Arti, Luis Fernandez came up to me saying “Hey, you know something about Asterisk, right?” – not something a random art exhibition visitor asks you – and proceeded to introduce me to Duncan. That opening line immediately got my attention, and Duncan went on to tell me about his project.

We Are Forests is a new mobile sound work being developed by Emilie Grenier and Duncan Speakman. It is the first collaboration between these two artists, who both have a history of making sound-based works. With Duncan's work rooted in scripted, narrative performances and Emilie's more in user-generated content, We Are Forests combines these two approaches in a sound performance of about 30 minutes for 10-20 active participants.

Project description

The idea behind We Are Forests is that participants gather at the place in the city that this particular instance of the performance is about. They leave their phone numbers, and at a certain point they all get a phone call. From that moment on, the performance has started.

Every participant hears pieces of location-specific pre-recorded text, music and atmospheric sounds while wandering seemingly at random around the place, e.g. a market hall. At any point, participants can choose to leave their own recording, be it a thought entering their head or a particular sound they hear. The recording is then shared with all the other participants.

Meanwhile, the performance “conductor” sits behind a laptop, monitoring the performance via a web interface. The conductor can insert more pre-recorded messages, instructions etc. into the participants' streams when he feels the time is right. Besides this, the conductor also has a “live phone”, which can be used to stream live audio to the participants without it first needing to be recorded.

The performance ends after about 20 minutes, when the participants are instructed to return to the place they started, and when arriving there, to open a piece of paper in their pockets. The paper reads: “hang up the phone”.

I created the software for the first version while Duncan and Emilie were staying in Amsterdam, and the first performance was on the Spui square with about 10 participants. It worked wonderfully, although at the end of the performance it crashed due to a missing audio file. Still, the test was successful, and the artists decided to keep working with me to improve the software while they were in Budapest.

How it works

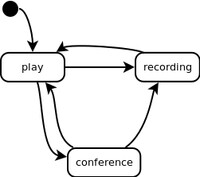

When a caller connects to the system, either by dialing in or by the system dialing them, the application creates a “session” for the channel that has connected. This session has its own state, which is shown below:

The “play” state means that the user is listening to a recording (either pre-recorded or a recording by another user). He starts in that state because, when dialed in, the first thing you always hear is the introduction sample, which explains how the performance works and sets the atmosphere.

Every caller session has a queue. When users have finished recording messages, or when the “conductor” feels like it, samples are pushed onto each session's queue. (The queue is a priority queue, with the samples pushed by the conductor taking priority over the user-generated recordings.) Samples are always heard in their entirety, and the next sample begins when the previous one has ended. At any time, users can press “1” to start a recording. After hearing a short message and a beep, they can record their own message, ending it by pressing “1” again.
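To make the queueing rule concrete, here is a minimal sketch of such a per-session priority queue. It is not taken from the weareforests code: the class name `SampleQueue`, the two priority constants and the file names are made up for illustration, and keeping first-in-first-out order among samples of equal priority is an assumption on my part.

```python
import heapq
import itertools

# Hypothetical priority levels: a lower number is played first.
CONDUCTOR = 0
USER = 1


class SampleQueue:
    """Per-session queue where conductor samples jump ahead of user recordings."""

    def __init__(self):
        self._heap = []
        # Running counter so samples with equal priority keep their push order.
        self._counter = itertools.count()

    def push(self, sample, priority=USER):
        heapq.heappush(self._heap, (priority, next(self._counter), sample))

    def pop(self):
        """Return the next sample to play, or None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


queue = SampleQueue()
queue.push("user-recording-1.wav")
queue.push("user-recording-2.wav")
queue.push("instruction.wav", priority=CONDUCTOR)
```

Popping from this queue yields the conductor's sample first, followed by the two user recordings in the order they were pushed.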

Version 2 added a conference feature: when a session's queue is empty, the caller is put in the “conference” state. Basically, he is transferred to a conference room, but his audio is muted, so he can only listen. This is where the conductor can decide to use his live phone to speak to the people currently in the conference. Whenever files are pushed to the listeners' queues, they are transferred back into the “play” state.
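The session states described above can be sketched as a small state machine. This is an illustration only, not the actual implementation: the `Session` class, its method names and the file names are hypothetical, a plain FIFO stands in for the priority queue, and dropping the sample that is playing when the caller presses “1” is an assumption, since that detail is not spelled out here.

```python
from collections import deque

PLAY, RECORD, CONFERENCE = "play", "record", "conference"


class Session:
    """One caller's session: starts in 'play' with the introduction sample."""

    def __init__(self, intro="intro.wav"):
        self.queue = deque()   # plain FIFO here; priorities are left out
        self.state = PLAY
        self.current = intro   # the sample currently being heard, if any

    def push(self, sample):
        """A new sample arrives (from the conductor or from another caller)."""
        self.queue.append(sample)
        if self.state == CONFERENCE:
            # Listeners in the conference go back to 'play' right away.
            self._play_next()

    def sample_finished(self):
        """The current sample has been heard to its end."""
        if self.state == PLAY:
            self._play_next()

    def key_pressed(self, digit):
        """Pressing '1' starts a recording; pressing it again ends it."""
        if digit != "1":
            return
        if self.state == RECORD:
            self._play_next()
        else:
            # Assumption: the interrupted sample is simply dropped.
            self.state = RECORD
            self.current = None

    def _play_next(self):
        if self.queue:
            self.current = self.queue.popleft()
            self.state = PLAY
        else:
            # Nothing left to play: listen (muted) in the conference room.
            self.current = None
            self.state = CONFERENCE


session = Session()
session.sample_finished()        # intro done, queue empty: 'conference'
session.push("instruction.wav")  # a pushed sample brings the caller back to 'play'
```

In the real system, the transitions would be driven by Asterisk events (playback finished, DTMF key received) rather than by direct method calls.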

Web interface

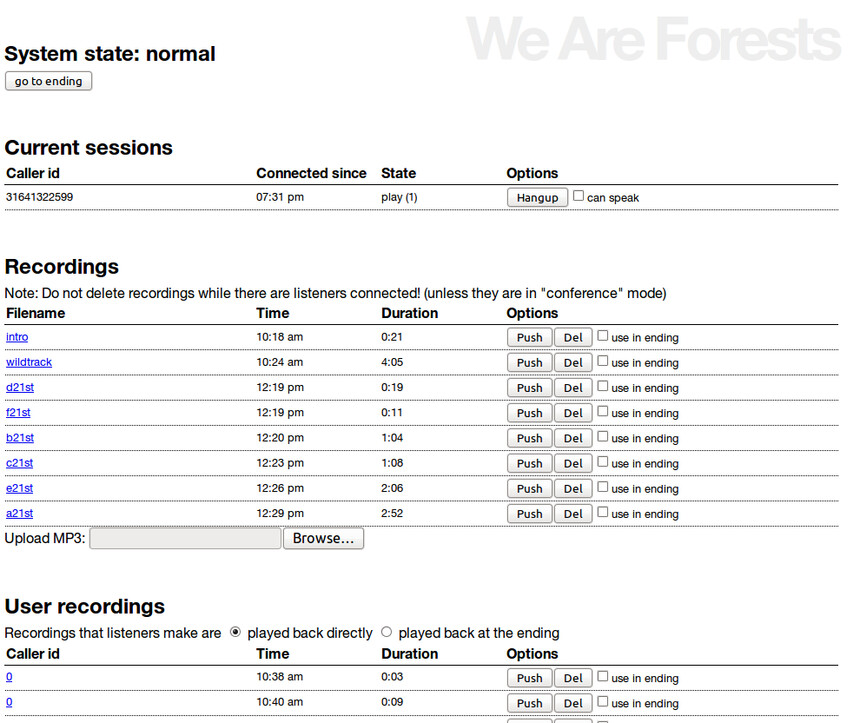

Besides adding the conference room, the second iteration of the project added a real-time web interface to monitor the state of the application and of each caller.

This web interface is designed for use by the conductor, and sports the following features:

Viewing the connected callers plus their state

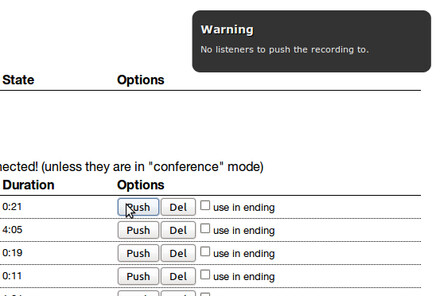

Pushing recordings to each session's queue

(un)muting a caller in the conference, so the phone can be used to put live audio through

Disconnecting a caller (for instance, a spammer)

Preparing a new performance by uploading new recordings (MP3) and deleting old ones

Dialing a list of numbers to start the performance

This last feature, dialing a list of numbers to start the performance, was added so that people can participate without having to pay for the call.

Software

By the way, the software is open source, released under the MIT license, and is available on GitHub. There are two repositories there, but “weareforests” is the main one, containing the program logic based on the Sparked framework (more on the technologies used in a later post!):

https://github.com/weareforests/weareforests

All in all, the system as it works now is fairly complete and I hope that the first test, which is tomorrow in the great market hall in Budapest, will go well! And a thank you to NIMk, for supporting this amazing project!
